On July 19, Meta released Llama2. The next day, Chinese Llama2 was released; the project can be found at:

https://github.com/LinkSoul-AI/Chinese-Llama-2-7b

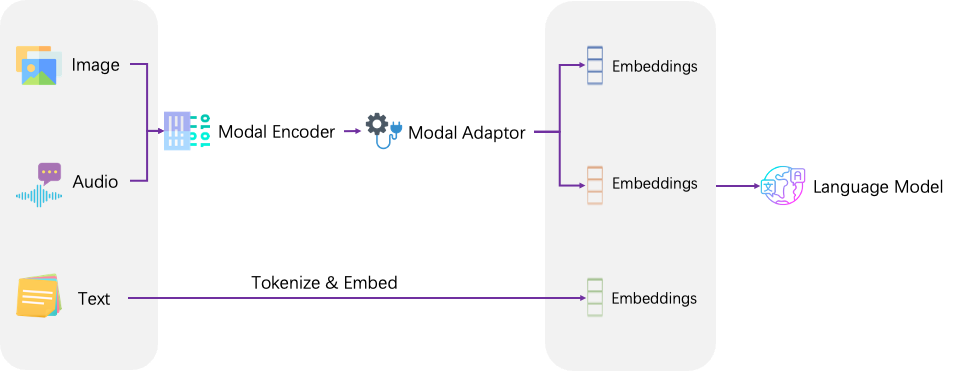

More impressively, it comes with a multimodal version, called LLaVA, that can also handle image and audio input. It unifies the embeddings of text, audio, and image, as shown below:

The GitHub repository for Chinese LLaVA can be found at: