Large language models like GPT-4 have taken the world by storm thanks to their astonishing command of natural language. Yet the most significant long-term opportunity for LLMs will entail an entirely different type of language: the language of biology.

One striking theme has emerged from the long march of research progress across biochemistry, molecular biology and genetics over the past century: it turns out that biology is a decipherable, programmable, in some ways even digital system.

DNA encodes the complete genetic instructions for every living organism on earth using just four symbols: the nucleotide bases A (adenine), C (cytosine), G (guanine) and T (thymine). Compare this to modern computing systems, which use two symbols, 0 and 1, to encode all the world’s digital electronic information. One system is binary and the other is quaternary, but the two have a surprising amount of conceptual overlap; both systems can properly be thought of as digital.
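To make the binary/quaternary comparison concrete, here is a minimal Python sketch. It assumes an arbitrary, hypothetical mapping of each base to two bits (A=00, C=01, G=10, T=11); the mapping and the function names are illustrative and are not a standard used in biology or computing.

```python
# Hypothetical 2-bit code for the four DNA bases (the assignment is arbitrary).
BASE_TO_BITS = {"A": "00", "C": "01", "G": "10", "T": "11"}
BITS_TO_BASE = {bits: base for base, bits in BASE_TO_BITS.items()}


def dna_to_bits(sequence: str) -> str:
    """Encode a DNA string as a bit string, two bits per base."""
    return "".join(BASE_TO_BITS[base] for base in sequence.upper())


def bits_to_dna(bits: str) -> str:
    """Decode a bit string produced by dna_to_bits back into DNA bases."""
    if len(bits) % 2 != 0:
        raise ValueError("bit string length must be even")
    return "".join(BITS_TO_BASE[bits[i:i + 2]] for i in range(0, len(bits), 2))


encoded = dna_to_bits("GATTACA")
print(encoded)               # 10001111000100
print(bits_to_dna(encoded))  # GATTACA
```

Because four symbols carry exactly two bits of information each, the round trip is lossless: any DNA string can be written as a binary string twice as long, and back again.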

To take another example, every protein in every living being consists of and is defined by a one-dimensional string of amino acids linked together in a particular order. Proteins range from a few dozen to several thousand amino acids in length, with 20 different amino acids to choose from.

This, too, represents an eminently computable system, one that language models are well-suited to learn.

As DeepMind CEO/cofounder Demis Hassabis put it: “At its most fundamental level, I think biology can be thought of as an information processing system, albeit an extraordinarily complex and dynamic one. Just as mathematics turned out to be the right description language for physics, biology may turn out to be the perfect type of regime for the application of AI.”

Large language models are at their most powerful when they can feast on vast volumes of signal-rich data, inferring latent patterns and deep structure that go well beyond the capacity of any human to absorb. They can then use this intricate understanding of the subject matter to generate novel, breathtakingly sophisticated output.

By ingesting a vast swath of the text on the internet, for instance, tools like ChatGPT have learned to converse with thoughtfulness and nuance on nearly any imaginable topic. By ingesting billions of images, text-to-image models like Midjourney have learned to produce creative original imagery on demand.

Pointing large language models at biological data—enabling them to learn the language of life—will unlock possibilities that will make natural language and images seem almost trivial by comparison.

What, concretely, will this look like?

In the near term, the most compelling opportunity to apply large language models in the life sciences is to design novel proteins.

Proteins 101

Proteins are at the center of life itself. As prominent biologist Arthur Lesk put it, “In the drama of life at a molecular scale, proteins are where the action is.”

Proteins are involved in virtually every important activity that happens inside every living thing: digesting food, contracting muscles, moving oxygen throughout the body, attacking foreign viruses. Your hormones are made out of proteins; so is your hair.

Proteins are so important because they are so versatile. They can adopt a vast array of structures and carry out a vast array of functions, far more than any other type of biomolecule. This incredible versatility is a direct consequence of how proteins are built.

As mentioned above, every protein consists of a string of building blocks known as amino acids strung together in a particular order. Based on this one-dimensional amino acid sequence, proteins fold into complex three-dimensional shapes that enable them to carry out their biological functions.

A protein’s shape relates closely to its function. To take one example, antibody proteins fold into shapes that enable them to precisely identify and target foreign bodies, like a key fitting into a lock. As another example, enzymes—proteins that speed up biochemical reactions—are specifically shaped to bind with particular molecules and thus catalyze particular reactions. Understanding the shapes that proteins fold into is thus essential to understanding how organisms function, and ultimately how life itself works.

Determining a protein’s three-dimensional structure based solely on its one-dimensional amino acid sequence has stood as a grand challenge in the field of biology for over half a century. Referred to as the “protein folding problem,” it has stumped generations of scientists. One commentator in 2007 described the protein folding problem as “one of the most important yet unsolved issues of modern science.”

Deep Learning And Proteins: A Match Made In Heaven

In late 2020, in a watershed moment in both biology and computing, an AI system called AlphaFold produced a solution to the protein folding problem. Built by Alphabet’s DeepMind, AlphaFold correctly predicted proteins’ three-dimensional shapes to within the width of about one atom, far outperforming any other method that humans had ever devised.

It is hard to overstate AlphaFold’s significance. Long-time protein folding expert John Moult summed it up well: “This is the first time a serious scientific problem has been solved by AI.”

Yet when it comes to AI and proteins, AlphaFold was just the beginning.

AlphaFold was not built using large language models. It relies on an older bioinformatics construct called multiple sequence alignment (MSA), in which a protein’s sequence is compared to evolutionarily similar proteins in order to deduce its structure.

MSA can be powerful, as AlphaFold made clear, but it has limitations.

For one, it is slow and compute-intensive because it needs to reference many different protein sequences in order to determine any one protein’s structure. More importantly, because MSA requires the existence of numerous evolutionarily and structurally similar proteins in order to reason about a new protein sequence, it is of limited use for so-called “orphan proteins”—proteins with few or no close analogues. Such orphan proteins represent roughly 20% of all known protein sequences.
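The core intuition behind multiple sequence alignment can be illustrated with a toy Python sketch. This is not AlphaFold’s pipeline: the aligned sequences below are made-up data, and real alignments are built by searching very large sequence databases. The sketch only shows how stacking related sequences reveals which positions are conserved, and why the approach has little to work with when few related sequences exist.

```python
from collections import Counter

# Toy, pre-aligned sequences (hypothetical data); "-" marks an alignment gap.
ALIGNMENT = [
    "MKT-AYI",
    "MKTQAYV",
    "MRTQAYI",
    "MKTQSYI",
]


def column_conservation(alignment):
    """Return (most common residue, fraction of rows sharing it) per column."""
    if len({len(row) for row in alignment}) != 1:
        raise ValueError("all aligned rows must have the same length")
    result = []
    for column in zip(*alignment):
        residue, count = Counter(column).most_common(1)[0]
        result.append((residue, count / len(column)))
    return result


for position, (residue, fraction) in enumerate(column_conservation(ALIGNMENT)):
    print(position, residue, f"{fraction:.2f}")
```

With only one row in the alignment, every column would be trivially "conserved" and the comparison would carry no signal, which is the situation orphan proteins create.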

Recently, researchers have begun to explore an intriguing alternative approach: using large language models, rather than multiple sequence alignment, to predict protein structures.

“Protein language models”—LLMs trained not on English words but rather on protein sequences—have demonstrated an astonishing ability to intuit the complex patterns and interrelationships between protein sequence, structure and function: say, how changing certain amino acids in certain parts of a protein’s sequence will affect the shape that the protein folds into. Protein language models are able to, if you will, learn the grammar or linguistics of proteins.
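As a deliberately tiny stand-in for this idea, the Python sketch below fits a bigram model, which simply counts which amino acid tends to follow which, on a few made-up sequences and then samples a new sequence from those counts. Real protein language models are large neural networks trained on enormous sequence databases; the training data, names and model here are hypothetical and only illustrate the principle of learning statistical patterns from sequence alone.

```python
import random
from collections import Counter, defaultdict

# Hypothetical toy training set; real models train on vastly more sequences.
TRAINING_SEQUENCES = ["MKTAYIAKQR", "MKTAYLAKQR", "MKSAYIAKNR"]
START, END = "^", "$"


def fit_bigrams(sequences):
    """Count how often each residue follows each other residue."""
    counts = defaultdict(Counter)
    for sequence in sequences:
        padded = START + sequence + END
        for previous, current in zip(padded, padded[1:]):
            counts[previous][current] += 1
    return counts


def sample_sequence(counts, rng, max_length=50):
    """Generate one sequence residue by residue from the bigram counts."""
    residues, previous = [], START
    while len(residues) < max_length:
        options = counts[previous]
        current = rng.choices(list(options), weights=list(options.values()))[0]
        if current == END:
            break
        residues.append(current)
        previous = current
    return "".join(residues)


counts = fit_bigrams(TRAINING_SEQUENCES)
print(sample_sequence(counts, random.Random(0)))
```

A bigram model only sees one residue of context, so it cannot capture the long-range dependencies that determine how a protein folds; that gap is what far larger models are meant to close.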

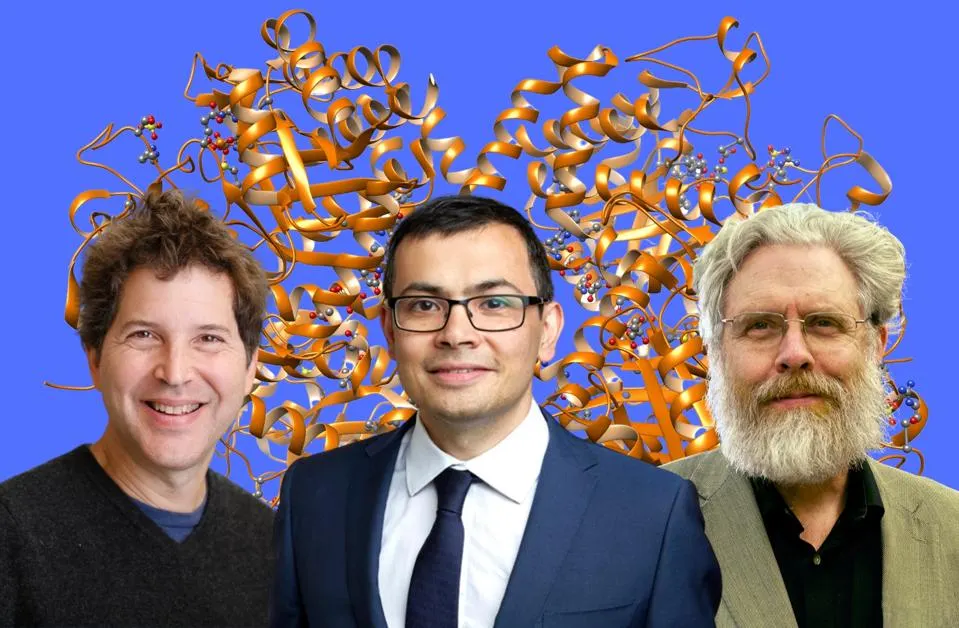

The idea of a protein language model dates back to the 2019 UniRep work out of George Church’s lab at Harvard (though UniRep used LSTMs rather than today’s state-of-the-art transformer models).

In late 2022, Meta debuted ESM-2 and ESMFold. At 15 billion parameters, ESM-2 is one of the largest and most sophisticated protein language models published to date. (ESM-2 is the LLM itself; ESMFold is its associated structure prediction tool.)

ESM-2/ESMFold is about as accurate as AlphaFold at predicting proteins’ three-dimensional structures. But unlike AlphaFold, it is able to generate a structure based on a single protein sequence, without requiring a multiple sequence alignment as input. As a result, it is up to 60 times faster than AlphaFold. When researchers are looking to screen millions of protein sequences at once in a protein engineering workflow, this speed advantage makes a huge difference. ESMFold can also produce more accurate structure predictions than AlphaFold for orphan proteins that lack evolutionarily similar analogues.

Language models’ ability to develop a generalized understanding of the “latent space” of proteins opens up exciting possibilities in protein science.

But an even more powerful conceptual advance has taken place in the years since AlphaFold.

In short, these protein models can be inverted: rather than predicting a protein’s structure based on its sequence, models like ESM-2 can be reversed and used to generate totally novel protein sequences that do not exist in nature based on desired properties.

Inventing New Proteins

All the proteins that exist in the world today represent but an infinitesimally tiny fraction of all the proteins that could theoretically exist. Herein lies the opportunity.

To give some rough numbers: the total set of proteins that exist in the human body, the so-called “human proteome,” is estimated to number somewhere between 80,000 and 400,000 proteins. Meanwhile, the number of proteins that could theoretically exist is in the neighborhood of 10^1,300, an unfathomably large number that dwarfs the roughly 10^80 atoms estimated to exist in the observable universe. (To be clear, not all of these 10^1,300 possible amino acid combinations would result in biologically viable proteins. Far from it. But some subset would.)
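The text does not say how the 10^1,300 figure is derived. One plausible reading, offered here as an assumption, is that it counts every possible chain of 1,000 amino acids drawn from the 20 available options, which gives 20^1,000 sequences. The short Python check below works through that arithmetic; the chain length of 1,000 is the assumed input.

```python
import math

NUM_AMINO_ACIDS = 20   # from the text: 20 amino acids to choose from
ASSUMED_LENGTH = 1000  # assumption: a chain of 1,000 amino acids

# log10(20 ** 1000) = 1000 * log10(20); working in logs avoids huge numbers.
exponent = ASSUMED_LENGTH * math.log10(NUM_AMINO_ACIDS)
print(f"20^{ASSUMED_LENGTH} is about 10^{exponent:.0f}")  # 20^1000 is about 10^1301
```

Shorter chains shrink the count and longer ones grow it, but under any reasonable choice of length the number of possible sequences is astronomically larger than the number nature has tried.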

Over many millions of years, the meandering process of evolution has stumbled upon tens or hundreds of thousands of these viable combinations. But this is merely the tip of the iceberg.

In the words of Molly Gibson, cofounder of leading protein AI startup Generate Biomedicines: “The amount of sequence space that nature has sampled through the history of life would equate to almost just a drop of water in all of Earth’s oceans.”

An opportunity exists for us to improve upon nature. After all, as powerful a force as it is, evolution by natural selection is not all-seeing; it does not plan ahead; it does not reason or optimize in top-down fashion. It unfolds randomly and opportunistically, propagating combinations that happen to work.

Using AI, we can for the first time systematically and comprehensively explore the vast uncharted realms of protein space in order to design proteins unlike anything that has ever existed in nature, purpose-built for our medical and commercial needs.

We will be able to design new protein therapeutics to address the full gamut of human illness—from cancer to autoimmune diseases, from diabetes to neurodegenerative disorders. Looking beyond medicine, we will be able to create new classes of proteins with transformative applications in agriculture, industrials, materials science, environmental remediation and beyond.

Some early efforts to use deep learning for de novo protein design have not made use of large language models.

One prominent example is ProteinMPNN, which came out of David Baker’s world-renowned lab at the University of Washington. Rather than using LLMs, the ProteinMPNN architecture relies heavily on protein structure data in order to generate novel proteins.

The Baker lab more recently published RFdiffusion, a more advanced and generalized protein design model. As its name suggests, RFdiffusion is built using diffusion models, the same AI technique that powers text-to-image models like Midjourney and Stable Diffusion. RFdiffusion can generate novel, customizable protein “backbones”—that is, proteins’ overall structural scaffoldings—onto which sequences can then be layered.

Structure-focused models like ProteinMPNN and RFdiffusion are impressive achievements that have advanced the state of the art in AI-based protein design. Yet we may be on the cusp of a new step-change in the field, thanks to the transformative capabilities of large language models.

Why are language models such a promising path forward compared to other computational approaches to protein design? One key reason: scaling.

Scaling Laws

One of the key forces behind the dramatic recent progress in artificial intelligence is so-called “scaling laws”: the fact that almost unbelievable improvements in performance result from continued increases in LLM parameter count, training data and compute.

At each order-of-magnitude increase in scale, language models have demonstrated remarkable, unexpected, emergent new capabilities that transcend what was possible at smaller scales.

It is OpenAI’s commitment to the principle of scaling, more than anything else, that has catapulted the organization to the forefront of the field of artificial intelligence in recent years. As it has moved from GPT-2 to GPT-3 to GPT-4 and beyond, OpenAI has built larger models, deployed more compute and trained on larger datasets than any other group in the world, unlocking stunning and unprecedented AI capabilities.

How are scaling laws relevant in the realm of proteins?

Thanks to scientific breakthroughs that have made gene sequencing vastly cheaper and more accessible over the past two decades, the amount of DNA and thus protein sequence data available to train AI models is growing exponentially, far outpacing protein structure data.

Protein sequence data can be tokenized and for all intents and purposes treated as textual data; after all, it consists of linear strings of amino acids in a certain order, like words in a sentence. Large language models can be trained solely on protein sequences to develop a nuanced understanding of protein structure and biology.
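A minimal sketch of what tokenizing a protein sequence can look like follows. It assumes a hypothetical vocabulary with one token per standard amino acid plus a few special tokens; the token names and ID assignments are illustrative and do not reproduce the vocabulary of any particular model.

```python
# The 20 standard amino acids in one-letter code.
AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"
RESIDUES = set(AMINO_ACIDS)

# Hypothetical vocabulary: special tokens first, then one token per residue.
SPECIAL_TOKENS = ["<pad>", "<bos>", "<eos>", "<unk>"]
VOCAB = {token: i for i, token in enumerate(SPECIAL_TOKENS + list(AMINO_ACIDS))}
ID_TO_TOKEN = {i: token for token, i in VOCAB.items()}


def tokenize(sequence: str) -> list[int]:
    """Map a protein sequence to token IDs, wrapped in start/end markers."""
    ids = [VOCAB["<bos>"]]
    ids += [VOCAB.get(residue, VOCAB["<unk>"]) for residue in sequence.upper()]
    ids.append(VOCAB["<eos>"])
    return ids


def detokenize(ids: list[int]) -> str:
    """Map token IDs back to a sequence, dropping special tokens."""
    return "".join(ID_TO_TOKEN[i] for i in ids if ID_TO_TOKEN[i] in RESIDUES)


ids = tokenize("MKT")
print(ids)              # [1, 14, 12, 20, 2]
print(detokenize(ids))  # MKT
```

Compared with natural language, the vocabulary is tiny: a couple dozen tokens rather than tens of thousands. The difficulty lies in the long-range relationships between positions, not in the alphabet.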

This domain is thus ripe for massive scaling efforts powered by LLMs—efforts that may result in astonishing emergent insights and capabilities in protein science.

The first work to use transformer-based LLMs to design de novo proteins was ProGen, published by Salesforce Research in 2020. The original ProGen model had 1.2 billion parameters.

Ali Madani, the lead researcher on ProGen, has since founded a startup named Profluent Bio to advance and commercialize the state of the art in LLM-driven protein design.

While he pioneered the use of LLMs for protein design, Madani is also clear-eyed about the fact that, by themselves, off-the-shelf language models trained on raw protein sequences are not the most powerful way to tackle this challenge. Incorporating structural and functional data is essential.

“The greatest advances in protein design will be at the intersection of careful data curation from diverse sources and versatile modeling that can flexibly learn from that data,” Madani said. “This entails making use of all high-signal data at our disposal—including protein structures and functional information derived from the laboratory.”

Another intriguing early-stage startup applying LLMs to design novel protein therapeutics is Nabla Bio. Spun out of George Church’s lab at Harvard and led by the team behind UniRep, Nabla is focused specifically on antibodies. Given that 60% of all protein therapeutics today are antibodies and that the two highest-selling drugs in the world are antibody therapeutics, it is hardly a surprising choice.

Nabla has decided not to develop its own therapeutics but rather to offer its cutting-edge technology to biopharma partners as a tool to help them develop their own drugs.

Expect to see much more startup activity in this area in the months and years ahead as the world wakes up to the fact that protein design represents a massive and still underexplored field to which to apply large language models’ seemingly magical capabilities.

The Road Ahead

In her acceptance speech for the 2018 Nobel Prize in Chemistry, Frances Arnold said: “Today we can for all practical purposes read, write, and edit any sequence of DNA, but we cannot compose it. The code of life is a symphony, guiding intricate and beautiful parts performed by an untold number of players and instruments. Maybe we can cut and paste pieces from nature’s compositions, but we do not know how to write the bars for a single enzymic passage.”

As recently as five years ago, this was true.

But AI may give us the ability, for the first time in the history of life, to actually compose entirely new proteins (and their associated genetic code) from scratch, purpose-built for our needs. It is an awe-inspiring possibility.

These novel proteins will serve as therapeutics for a wide range of human illnesses, from infectious diseases to cancer; they will help make gene editing a reality; they will transform materials science; they will improve agricultural yields; they will neutralize pollutants in the environment; and so much more that we cannot yet even imagine.

The field of AI-powered—and especially LLM-powered—protein design is still nascent and unproven. Meaningful scientific, engineering, clinical and business obstacles remain. Bringing these new therapeutics and products to market will take years.

Yet over the long run, few market applications of AI hold greater promise.

In future articles, we will delve deeper into LLMs for protein design, including exploring the most compelling commercial applications for the technology as well as the complicated relationship between computational outcomes and real-world wet lab experiments.

Let’s end by zooming out. De novo protein design is not the only exciting opportunity for large language models in the life sciences.

Language models can be used to generate other classes of biomolecules, notably nucleic acids. A buzzy startup named Inceptive, for example, is applying LLMs to generate novel RNA therapeutics.

Other groups have even broader aspirations, aiming to build generalized “foundation models for biology” that can fuse diverse data types spanning genomics, protein sequences, cellular structures, epigenetic states, cell images, mass spectrometry, spatial transcriptomics and beyond.

The ultimate goal is to move beyond modeling an individual molecule like a protein to modeling proteins’ interactions with other molecules, then to modeling whole cells, then tissues, then organs—and eventually entire organisms.

The idea of building an artificial intelligence system that can understand and design every intricate detail of a complex biological system is mind-boggling. In time, this will be within our grasp.

The twentieth century was defined by fundamental advances in physics: from Albert Einstein’s theory of relativity to the discovery of quantum mechanics, from the nuclear bomb to the transistor. As many modern observers have noted, the twenty-first century is shaping up to be the century of biology. Artificial intelligence and large language models will play a central role in unlocking biology’s secrets and unleashing its possibilities in the decades ahead.

Buckle up.